Overview

In the previous article, we saw how to define, implement and run a simple application using Chronicle Services. We saw that a Service is a self-contained component that accepts input from one or more sources, and posts output to a single sink. Input and output are in the form of Events, and the overall architectural approach of Chronicle Services is based on Event-Driven Architecture.

The example used in the earlier article was based on very simple data, accepting input as a pair of numbers and posting output as a single number. In reality, both input and output will consist of more structured data. In this article, we will explore the ideas behind Events in Chronicle Services in more detail, and how to build a service that works with Events carrying structured data.

The Example

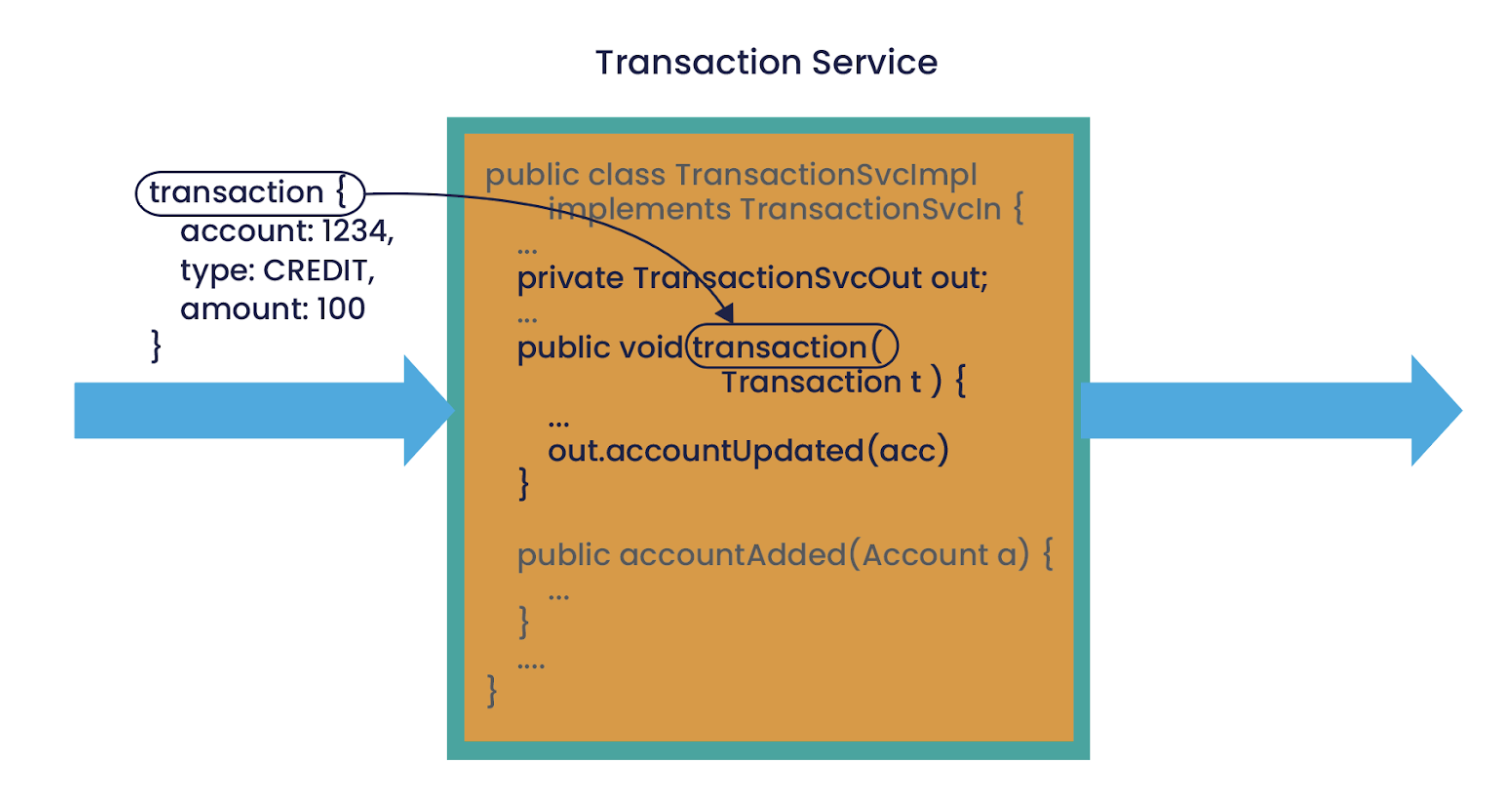

We will discuss an example service that implements the application of a credit/debit transaction to an account. The service posts an event representing updated account information (including balance) once the transaction has been successfully applied. We will not consider error handling here, this will be covered in a later article.

The following diagram shows the service, and its input and output events:

The service must also maintain details of the accounts to which incoming transactions are applied; how this state is managed will be covered in later articles. For now, we will concentrate on the Events that are input and output.

What’s in an Event?

In event-driven systems, an event is defined as an immutable record that something has happened. Events should represent concepts from the domain of a service or application.

Chronicle Services represents each Event as a data structure with three parts: an Event id, an Event creation timestamp, and the Event Data.

The Event id and Event creation timestamp fields are managed and used by the Chronicle Services runtime. In the business logic of a Service, we focus on the Event Data, which is defined as a Java class extending the base type AbstractEvent<E>. The base class adds the Event metadata and provides the hooks that connect to the Chronicle Services runtime event handling and serialisation functionality. An instance of this type is called a Data Transfer Object, or DTO.

As an example, consider the events labelled “transaction” in the diagram. The Event Data for this event is defined as follows:

```java
public class Transaction extends AbstractEvent<Transaction> {
    private long accountNumber;
    private double amount;
    private Entry entry;
    // … methods
}
```

where the Entry type is defined separately:

```java
public enum Entry {
    CREDIT,
    DEBIT;
}
```

No further definitions are necessary for the Event: all management and transmission capabilities are made available automatically through the AbstractEvent<Transaction> base type. This is a significant simplification compared with transmission models that require code to be generated for serialisation and deserialisation.
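To make the role of the base type more concrete, here is a minimal plain-Java sketch of the pattern. SketchEvent is a hypothetical stand-in for AbstractEvent<E>: it is not the Chronicle Services class and models only the two metadata fields. The fluent accessors on Transaction are likewise an assumption, since the article elides its methods. The self-referencing type parameter E is what allows a base-class setter to return the subclass type, so calls can be chained.

```java
import java.time.LocalDateTime;

// Hypothetical stand-in for AbstractEvent<E>: it only models the Event metadata.
// The real Chronicle Services base class also hooks into the runtime and serialisation.
abstract class SketchEvent<E extends SketchEvent<E>> {
    private String eventId;          // set by the runtime in Chronicle Services
    private LocalDateTime eventTime; // set by the runtime in Chronicle Services

    public String eventId() { return eventId; }
    public LocalDateTime eventTime() { return eventTime; }

    // Returning E (not SketchEvent) lets subclass setters be chained after these.
    @SuppressWarnings("unchecked")
    public E eventId(String id) { this.eventId = id; return (E) this; }

    @SuppressWarnings("unchecked")
    public E eventTime(LocalDateTime time) { this.eventTime = time; return (E) this; }
}

enum Entry { CREDIT, DEBIT }

// Event Data: the subclass declares only the business fields.
// The fluent accessor style is an assumption; the article elides these methods.
class Transaction extends SketchEvent<Transaction> {
    private long accountNumber;
    private double amount;
    private Entry entry;

    public long accountNumber() { return accountNumber; }
    public Transaction accountNumber(long n) { this.accountNumber = n; return this; }
    public double amount() { return amount; }
    public Transaction amount(double a) { this.amount = a; return this; }
    public Entry entry() { return entry; }
    public Transaction entry(Entry e) { this.entry = e; return this; }
}

class SketchEventDemo {
    public static void main(String[] args) {
        Transaction tx = new Transaction()
                .eventId("transaction")         // normally assigned by the runtime
                .eventTime(LocalDateTime.now()) // normally assigned by the runtime
                .accountNumber(45454545L)
                .amount(10)
                .entry(Entry.CREDIT);
        // prints: transaction 45454545 10.0 CREDIT
        System.out.println(tx.eventId() + " " + tx.accountNumber() + " " + tx.amount() + " " + tx.entry());
    }
}
```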

The Service API

The public interface of a Chronicle Service is defined by the Events that it is willing to receive, and those that it may post. These are defined in one or more Java interfaces. For example, the input Events for the example service can be defined in the following interface:

```java
public interface TransactionSvcIn {
    void transaction(Transaction transaction);

    void accountAdded(Account account);
}
```

Each method represents a “type” of Event, and its parameter is the DTO that forms the payload of the Event.
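The convention that a method name identifies an Event type can be illustrated with a small reflection-based dispatcher. This is a sketch under stated assumptions, not how Chronicle Services is implemented: EventDispatcher is a hypothetical class, and the DTOs are reduced to plain Java records rather than AbstractEvent subclasses.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

// Minimal stand-ins for the DTOs (plain records, not AbstractEvent subclasses).
record Transaction(long accountNumber, double amount, String entry) {}
record Account(long accountNumber, double balance) {}

// Input API, as defined in the article.
interface TransactionSvcIn {
    void transaction(Transaction transaction);
    void accountAdded(Account account);
}

// Hypothetical dispatcher: routes a payload to the handler method whose name equals
// the event id. Chronicle Services does this routing itself; reflection is only illustrative.
final class EventDispatcher {
    private final Class<?> api;
    private final Object handler;

    EventDispatcher(Class<?> api, Object handler) {
        this.api = api;
        this.handler = handler;
    }

    void dispatch(String eventId, Object payload) throws ReflectiveOperationException {
        for (Method m : api.getMethods()) {
            if (m.getName().equals(eventId) && m.getParameterCount() == 1) {
                m.invoke(handler, payload);
                return;
            }
        }
        throw new IllegalArgumentException("No handler for event id: " + eventId);
    }
}

class DispatchDemo {
    public static void main(String[] args) throws ReflectiveOperationException {
        List<String> seen = new ArrayList<>();
        TransactionSvcIn svc = new TransactionSvcIn() {
            @Override public void transaction(Transaction t) { seen.add("transaction:" + t.accountNumber()); }
            @Override public void accountAdded(Account a) { seen.add("accountAdded:" + a.accountNumber()); }
        };
        EventDispatcher dispatcher = new EventDispatcher(TransactionSvcIn.class, svc);
        dispatcher.dispatch("accountAdded", new Account(45454545L, 0));
        dispatcher.dispatch("transaction", new Transaction(45454545L, 10, "CREDIT"));
        System.out.println(seen); // [accountAdded:45454545, transaction:45454545]
    }
}
```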

The output events are defined in a separate interface:

```java
public interface TransactionSvcOut {
    void accountUpdated(Account value);
}
```

Working with Events

One of the key factors in the performance of any system that exchanges data between components is the mechanism used to encode that data for transmission. Data represented as Java objects must be transformed into a format suitable for sending over an inter-process communication (IPC) medium, and transformed back again at the receiving end.

Conventional microservice-based applications, where HTTP is the most commonly used transport, typically use a text-based format such as JSON, YAML or XML. However, serialising Java objects into the transmission format for sending, and deserialising them on receipt, introduces significant overhead in both time and intermediate object creation, which places strain on the JVM’s memory management subsystem.

Chronicle Services uses Chronicle Queue as its default Event transport. Chronicle Queue and the associated Chronicle Wire library define an efficient binary format for the transmission of messages, and Chronicle Wire provides high-performance transformations of Java objects between their in-memory JVM representation and this “wire” format. As mentioned above, these transformations are made available to Chronicle Services automatically through the AbstractEvent<E> type, which should be the base type of all DTOs used for Events. No intermediate code-generation phase or additional method implementations are required. DTOs are serialised and deserialised without creating intermediate objects, which significantly reduces memory pressure and can remove the need for regular minor garbage collections.
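The sketch below illustrates the general idea of binary encoding into a reused buffer, using only java.nio.ByteBuffer. The fixed 17-byte layout and the TransactionCodec class are hypothetical and are not Chronicle Wire's actual format; the point is that fields are written and read directly, and incoming data can be decoded into an existing DTO instance rather than a newly allocated one.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

enum Entry { CREDIT, DEBIT }

// Mutable stand-in DTO, so a single instance can be reused for every message.
final class Transaction {
    long accountNumber;
    double amount;
    Entry entry;
}

// Hypothetical fixed-layout binary codec. This is NOT Chronicle Wire's format; it only
// illustrates encoding fields straight into a reused buffer without intermediate objects.
final class TransactionCodec {
    static final int SIZE = Long.BYTES + Double.BYTES + Byte.BYTES; // 17 bytes
    private static final Entry[] ENTRIES = Entry.values();         // cached: values() copies the array

    static void write(Transaction t, ByteBuffer buf) {
        buf.putLong(t.accountNumber);
        buf.putDouble(t.amount);
        buf.put((byte) t.entry.ordinal());
    }

    // Fills an existing instance instead of allocating a new one per message.
    static void read(ByteBuffer buf, Transaction into) {
        into.accountNumber = buf.getLong();
        into.amount = buf.getDouble();
        into.entry = ENTRIES[buf.get()];
    }
}

class CodecDemo {
    public static void main(String[] args) {
        ByteBuffer buf = ByteBuffer.allocateDirect(TransactionCodec.SIZE); // allocated once, reused
        Transaction out = new Transaction();
        out.accountNumber = 45454545L;
        out.amount = 10;
        out.entry = Entry.CREDIT;

        buf.clear();
        TransactionCodec.write(out, buf);
        buf.flip();

        Transaction in = new Transaction(); // the same instance could receive every message
        TransactionCodec.read(buf, in);

        String json = "{\"accountNumber\":45454545,\"amount\":10.0,\"entry\":\"CREDIT\"}";
        System.out.println(TransactionCodec.SIZE + " bytes binary vs "
                + json.getBytes(StandardCharsets.UTF_8).length + " bytes as JSON text");
        // prints: 17 bytes binary vs 57 bytes as JSON text
    }
}
```

The JSON string is built here only for a size comparison; a text format also has to be parsed on receipt, which is where much of the extra time and object creation comes from.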

Moreover, for diagnostic purposes, Chronicle Wire provides translation from the wire format into a text-based representation of the events, such as JSON or YAML. For example, a “transaction” Event can be seen as:

```yaml
transaction: {
  eventId: transaction,
  eventTime: 2023-08-02T04:11:48.202017908,
  accountNumber: 45454545,
  amount: 10,
  entry: CREDIT
}
```

Notice the event id and timestamp that were added by Chronicle Services, and the remaining Event Data fields as defined in the Transaction DTO class.

Sending and Receiving Events

Chronicle Services, through the highly tuned capabilities of the Chronicle Wire library, makes it straightforward to define how Events are sent and received.

The implementation class for a Service should implement the input Interface(s) that specify its incoming Events. The implementations of the methods in these interfaces become handlers for the incoming events, with Chronicle Services invoking them as soon as an Event of the correct “id” is detected on the input Queue.

For the example above, therefore, the transaction() method is invoked with the decoded Transaction DTO as its parameter.
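A minimal sketch of such an implementation class for the example service follows. The stand-in DTOs, Account's accountNumber and balance fields, and the TransactionSvcImpl name are assumptions for illustration. In Chronicle Services the runtime invokes the handlers and supplies the TransactionSvcOut reference; here the output is passed to the constructor by hand as a lambda.

```java
import java.util.HashMap;
import java.util.Map;

enum Entry { CREDIT, DEBIT }

// Stand-in DTOs; in the article they extend AbstractEvent. Account's fields are assumed.
final class Transaction {
    final long accountNumber;
    final double amount;
    final Entry entry;
    Transaction(long accountNumber, double amount, Entry entry) {
        this.accountNumber = accountNumber;
        this.amount = amount;
        this.entry = entry;
    }
}

final class Account {
    final long accountNumber;
    double balance;
    Account(long accountNumber, double balance) {
        this.accountNumber = accountNumber;
        this.balance = balance;
    }
}

interface TransactionSvcIn {
    void transaction(Transaction transaction);
    void accountAdded(Account account);
}

interface TransactionSvcOut {
    void accountUpdated(Account value);
}

// Hypothetical implementation class. In Chronicle Services the runtime calls these
// handlers and supplies the TransactionSvcOut reference; here both are done by hand.
final class TransactionSvcImpl implements TransactionSvcIn {
    private final Map<Long, Account> accounts = new HashMap<>(); // simple in-memory state
    private final TransactionSvcOut out;

    TransactionSvcImpl(TransactionSvcOut out) {
        this.out = out;
    }

    @Override
    public void accountAdded(Account account) {
        accounts.put(account.accountNumber, account);
    }

    @Override
    public void transaction(Transaction tx) {
        Account account = accounts.get(tx.accountNumber);
        if (account == null) {
            return; // error handling is out of scope here, as in the article
        }
        account.balance += tx.entry == Entry.CREDIT ? tx.amount : -tx.amount;
        out.accountUpdated(account); // post the output Event
    }
}

class TransactionSvcDemo {
    public static void main(String[] args) {
        TransactionSvcImpl svc = new TransactionSvcImpl(
                a -> System.out.println("accountUpdated " + a.accountNumber + " balance=" + a.balance));
        svc.accountAdded(new Account(45454545L, 100));
        svc.transaction(new Transaction(45454545L, 10, Entry.CREDIT)); // accountUpdated 45454545 balance=110.0
        svc.transaction(new Transaction(45454545L, 25, Entry.DEBIT));  // accountUpdated 45454545 balance=85.0
    }
}
```

Because the handlers are ordinary methods on an ordinary class, the business logic can be exercised directly like this, without any transport in place.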

For outgoing events, an implementation of the output Interface is created by Chronicle Services and injected into the implementation class at construction time. When a method from the output Interface is invoked through this reference, Chronicle Services will serialise the associated DTO into the wire format and post it to the output Queue. The timestamp is set to the time at which the Event was serialised and posted.
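The injected output implementation can be approximated in plain Java with a dynamic proxy from java.lang.reflect.Proxy. In this hypothetical sketch, OutputWriters and PostedEvent are invented names, a List stands in for the output Queue, and String.valueOf stands in for serialisation. It only mirrors the behaviour described above: the Event id is taken from the method name and the timestamp is set when the Event is posted.

```java
import java.lang.reflect.Proxy;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

// Stand-in DTO (its fields are assumed).
record Account(long accountNumber, double balance) {}

interface TransactionSvcOut {
    void accountUpdated(Account value);
}

// One posted Event: id from the method name, timestamp from the time of posting.
record PostedEvent(String eventId, Instant eventTime, String payload) {}

// Hypothetical factory standing in for what the runtime does: it builds an implementation of
// an output interface whose calls become Events appended to a "queue" (a List in this sketch).
final class OutputWriters {
    static <T> T writerFor(Class<T> api, List<PostedEvent> queue) {
        Object proxy = Proxy.newProxyInstance(
                api.getClassLoader(),
                new Class<?>[] { api },
                (self, method, args) -> {
                    if (method.getDeclaringClass() == Object.class) {
                        // Keep equals/hashCode/toString well-behaved on the proxy itself.
                        switch (method.getName()) {
                            case "equals": return self == args[0];
                            case "hashCode": return System.identityHashCode(self);
                            default: return "writer for " + api.getSimpleName();
                        }
                    }
                    // Stand-in for serialisation: record the payload's text form.
                    queue.add(new PostedEvent(method.getName(), Instant.now(), String.valueOf(args[0])));
                    return null;
                });
        return api.cast(proxy);
    }
}

class OutputWriterDemo {
    public static void main(String[] args) {
        List<PostedEvent> queue = new ArrayList<>();
        TransactionSvcOut out = OutputWriters.writerFor(TransactionSvcOut.class, queue);
        out.accountUpdated(new Account(45454545L, 110.0));
        PostedEvent event = queue.get(0);
        System.out.println(event.eventId() + " " + event.payload());
        // prints: accountUpdated Account[accountNumber=45454545, balance=110.0]
    }
}
```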

Summary

Chronicle Services is designed to support building applications that follow the guidelines of Event-Driven Architecture. Loosely coupled components, known as Services, exchange data using Chronicle Queue and related libraries, which have been shown to deliver market-leading latency figures.

Services are developed with a focus on business logic. Connection to the underlying infrastructure is provided and managed by the Chronicle Services runtime and not through further API calls, which can introduce additional complexity and overhead into applications.