By The Chronicle Team 14th June 2021

Financial trading systems require extremely low latency. In modern markets, financial instrument pricing changes very rapidly, and a client’s profit or loss can be affected by delays in pricing awareness as small as a few hundred nanoseconds. For this reason, there is a financial benefit in achieving low latency. When streaming a high volume of data to disk, the choice of file system can have a small but still significant impact on the overall application performance.

The Impact of File System on Latency

Benchmarks were run using a typical low-latency trading application stack to measure the effect of different Linux file systems. The following file systems were used:

- ext4: the Linux fourth extended file system (stable 2008)

- ext3: the Linux third extended file system (released 2001)

- ext2: the Linux second extended file system (released 1993)

- xfs: designed for parallel I/O, supported by most versions of Linux (released 1994)

- btrfs: B-tree file system, designed for scaling I/O to available disk space (stable 2013)

- ramfs: an older memory-based file system

- tmpfs: modern memory-based file system on Linux

With the exception of the two in-memory file systems (ramfs and tmpfs) all tests were run using PCIe NVMe SSDs. As a side note, while it is technically possible to set up ext4 and other “standard” file systems to be backed by RAM, this is atypical and also not as efficient as tmpfs so is not covered in this note.
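Because the results depend on which file system backs the queue directory, it can be worth confirming this before a benchmark run. The following is a minimal sketch using the standard JDK `FileStore` API. The class name `FileSystemCheck` and the default path are illustrative assumptions and are not part of the test setup described here.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.FileStore;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper, not part of Chronicle Queue or the benchmark harness.
class FileSystemCheck {

    // Returns the file system type the OS reports for the store holding the path,
    // for example "ext4", "xfs" or "tmpfs" on Linux.
    static String fileSystemType(Path path) {
        try {
            FileStore store = Files.getFileStore(path);
            return store.type();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Assumed usage: pass the queue directory as the first argument;
        // falls back to the current directory.
        Path dir = Paths.get(args.length > 0 ? args[0] : ".");
        System.out.println(dir.toAbsolutePath() + " is on " + fileSystemType(dir));
    }
}
```

The reported string comes from the operating system, so the exact names can vary between platforms.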

The tests in this study measured the round trip request-response latency through a Chronicle FIX Engine (specifically NewOrderSingle read to ExecutionReport send). The Chronicle FIX Engine uses memory-mapped files for persisting state (via Chronicle Queue), and the variation in overall application performance was measured with the queues mounted on different file systems. Summary statistics were gathered for mean, standard deviation (SD), range, and percentile bins from the 50th to the 99.99th percentile.
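As a rough illustration of how summary statistics of this kind can be derived from raw latency samples, here is a minimal Java sketch. The class `LatencyStats`, the nearest-rank percentile method, the use of population standard deviation, and the sample values are all assumptions for illustration. The tooling actually used for the benchmark is not described in this note.

```java
import java.util.Arrays;

// Hypothetical helper for summarising latency samples (e.g. in nanoseconds).
class LatencyStats {

    // Arithmetic mean of the samples.
    static double mean(long[] samples) {
        double sum = 0;
        for (long s : samples) {
            sum += s;
        }
        return sum / samples.length;
    }

    // Population standard deviation (divides by n, not n - 1).
    static double standardDeviation(long[] samples) {
        double m = mean(samples);
        double sumSq = 0;
        for (long s : samples) {
            double d = s - m;
            sumSq += d * d;
        }
        return Math.sqrt(sumSq / samples.length);
    }

    // Nearest-rank percentile on an ascending-sorted array:
    // the smallest sample such that at least p percent of samples are <= it.
    static long percentile(long[] sorted, double p) {
        int rank = (int) Math.ceil(p * sorted.length / 100.0);
        return sorted[Math.max(rank, 1) - 1];
    }

    public static void main(String[] args) {
        // Made-up sample values, purely for demonstration.
        long[] latenciesNs = {900, 1100, 1000, 950, 1200, 5000, 980, 1020, 990, 1010};
        long[] sorted = latenciesNs.clone();
        Arrays.sort(sorted);

        System.out.println("mean  = " + mean(sorted));
        System.out.println("sd    = " + standardDeviation(sorted));
        System.out.println("range = " + (sorted[sorted.length - 1] - sorted[0]));
        for (double p : new double[] {50, 90, 99, 99.9, 99.99}) {
            System.out.println("p" + p + " = " + percentile(sorted, p));
        }
    }
}
```

With only a handful of samples the high percentiles all collapse onto the maximum. Resolving the 99.99th percentile meaningfully needs at least tens of thousands of samples, which is one reason tail latency is usually reported from long runs.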

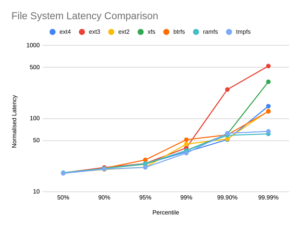

The below plot shows the results for 60-second tests with 1000 FIX messages per second per client, running on a server with four cores dedicated to the FIX engine:

The plot shows file system write latency based on the lowest latency measurement in the testing. Median latency is broadly equivalent across all file systems; however, clear divergence is seen at the 90th percentile and above (note the logarithmic scale).

The main observations are:

- Minimum: all file systems showed similar minimum numbers

- Percentiles 50% through 95%: the performance of all file systems was similar in this percentile range, with tmpfs having the best performance

- Percentiles 99% through 99.9%: performance was fairly consistent in this range, with ext4 the best of the physical disk file systems. ext3 was a significant outlier, with about five times the latency of the other file systems

- Percentile 99.99%: at this level, the best latencies are achieved when using either of the two in-memory file systems (ramfs or tmpfs). ext4, ext2, and btrfs tail latencies were around double those of tmpfs, while ext3 and xfs both showed appreciably higher tail latencies around an order of magnitude above tmpfs

- Mean: all file systems except for btrfs had similar performance; the mean latency of btrfs was about 60% higher

- Standard Deviation (SD): ext4, ext2, ramfs, and tmpfs had the lowest SDs; the xfs SD was about double, ext3 SD was more than triple, and the btrfs SD was more than two orders of magnitude higher

Chronicle Queue is a popular Open Source project built on the sharing of memory-mapped files between Java processes, providing very fast transfer of data between processes on the same host, thereby offering a low latency IPC (interprocess communication) solution. The core library uses bespoke off-heap memory management which eliminates JVM garbage collection, helping make Chronicle Queue a compelling choice for organizations wishing to persist a stream of events with the lowest possible latency.
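To illustrate the underlying mechanism only, the sketch below passes a value through a memory-mapped file using the plain JDK `FileChannel` API. It is not Chronicle Queue's API or implementation. The class `MappedFileSketch`, the 4096-byte region, and the single-`long` "message" layout are assumptions made for the example, and both mappings live in one process here for simplicity, whereas in practice they would typically be in separate processes.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical illustration of sharing data via a memory-mapped file.
class MappedFileSketch {

    static final int REGION_BYTES = 4096; // assumed size of the shared region

    // Maps the first REGION_BYTES of the file read-write, creating it if needed.
    // A READ_WRITE mapping extends the file when it is shorter than the region.
    static MappedByteBuffer map(Path file) {
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.CREATE,
                StandardOpenOption.READ,
                StandardOpenOption.WRITE)) {
            // The mapping remains valid after the channel is closed.
            return channel.map(FileChannel.MapMode.READ_WRITE, 0, REGION_BYTES);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Writes a value through one mapping and reads it back through a second,
    // independent mapping of the same file. Visibility between mappings is
    // operating-system dependent; on Linux shared mappings see each other's writes.
    static long roundTrip(long value) {
        Path file;
        try {
            file = Files.createTempFile("mapped-sketch", ".dat");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        file.toFile().deleteOnExit();

        MappedByteBuffer writer = map(file);
        MappedByteBuffer reader = map(file);

        writer.putLong(0, value);   // off-heap write into the mapped region
        return reader.getLong(0);   // read via the other mapping
    }

    public static void main(String[] args) {
        System.out.println("read back: " + roundTrip(System.nanoTime()));
    }
}
```

Where the mapped file lives (for example a tmpfs mount or an ext4 volume on NVMe) determines how the operating system writes dirty pages back, which is where the file system choice discussed above comes into play.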

Chronicle Queue accomplishes the dual objectives of minimizing real-time latencies while also persisting all data transactions. It can be used with in-memory (volatile) file systems such as tmpfs to minimize data access times, or it can be backed with persistent storage such as SSDs to keep a permanent record of every event.

Conclusion

For the standard case where data needs to be persisted to physical disk, our recommendation for best overall latency performance is ext4 on PCIe NVMe SSD.

In cases where persistence is not required, tmpfs provides consistent low latency with well controlled outliers.

In general, an Enterprise platform will require distributed copies of data over multiple machines in order to provide suitable High-Availability and Disaster Recovery (HA/DR) guarantees, and Chronicle-Queue-Enterprise builds on the Chronicle Queue core platform to provide replicated copies of the queue data over a cluster of hosts. With this, the use of tmpfs on any single host can become a more valid option even for HA/DR given multiple copies of the data exist within the wider cluster.

Finally, for some use cases, consideration of the underlying capabilities and resilience of a file system may outweigh latency performance. For example, Btrfs has several features not available with ext4 (e.g. snapshots, copy-on-write, built-in volume management, and the ability to span multiple disks and partitions); on the other hand, ext4, while simpler, has been mainstream for well over a decade and is a proven, highly stable file system.