March 24th 2020

Over the past few weeks we have seen incredible levels of volatility in the markets across all asset classes, which may have left many organisations' trading systems struggling with the increased volumes. Our design principles at Chronicle ensure that there is always headroom within your infrastructure, regardless of the prevailing market conditions.

All of our software is designed to run at only 1% to 10% utilisation to ensure consistent latencies, which leaves significant headroom. While 1% utilisation sounds low compared with most computer systems, for a low-latency system it means your service is 100% utilised 1% of the time, which impacts your 99th percentile latencies. Should markets experience huge amounts of volatility, there is enough spare capacity to maintain reasonable performance even at the outliers.
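To see why utilisation, rather than raw throughput, governs tail latency, here is a small illustration using the textbook M/M/1 queue: random (Poisson) arrivals, exponentially distributed service times and a single server. This model is an assumption chosen for illustration. It does not describe how Chronicle's software is built or benchmarked. In this model the server is busy a fraction `rho` of the time, and because arrivals are random, the same fraction of messages arrive to find it busy and have to wait. The waiting time then satisfies `P(W > t) = rho * exp(-(mu - lambda) * t)`, where `mu` is the service rate and `lambda` the arrival rate. The class name `Mm1TailLatency` is hypothetical.

```java
/**
 * Textbook M/M/1 queue (Poisson arrivals, exponential service, one server).
 * Used only to illustrate how utilisation drives tail latency; it is an
 * assumption for illustration, not a model of Chronicle's software.
 */
public class Mm1TailLatency {

    /**
     * Waiting time before service starts at percentile p (e.g. 0.99),
     * in units of the mean service time, for utilisation rho in (0, 1).
     * Uses P(wait > t) = rho * exp(-(mu - lambda) * t) with mu = 1.
     */
    public static double waitPercentile(double rho, double p) {
        if (rho <= 0 || rho >= 1) {
            throw new IllegalArgumentException("utilisation must be in (0, 1)");
        }
        double tail = 1.0 - p;        // fraction of messages allowed to exceed t
        if (rho <= tail) {
            return 0.0;               // no more than that fraction ever queues at all
        }
        // Measuring time in service times sets mu = 1, so mu - lambda = 1 - rho.
        return Math.log(rho / tail) / (1.0 - rho);
    }

    public static void main(String[] args) {
        for (double rho : new double[] {0.01, 0.10, 0.50, 0.90}) {
            System.out.printf("utilisation %3.0f%% -> 99th percentile wait = %.1f service times%n",
                    rho * 100, waitPercentile(rho, 0.99));
        }
    }
}
```

Running `main` gives a 99th percentile wait of zero at 1% utilisation, because only about 1% of messages queue at all. At 10%, 50% and 90% utilisation, it gives roughly 2.6, 7.8 and 45 mean service times. Real market traffic tends to be burstier than Poisson arrivals, which typically makes queueing worse rather than better.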

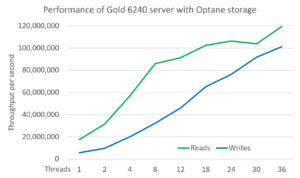

To illustrate this spare capacity, we carried out some intensive testing in a Lenovo Lab, measuring the read and write throughput of Chronicle Queue across a range of thread counts. The benchmark measures the throughput of reads and writes of 60-byte messages and gives some insight into how Chronicle Queue handles bursts of messages. As shown in the benchmark chart, a single thread was able to handle around 18 million writes per second. This rises to 120 million reads and over 100 million writes per second with 36 threads. At 60 bytes per message, that is a burst of roughly 13 GB of data in a single second.
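The headline figures can be turned into a per-message time budget and a bandwidth figure with simple arithmetic. The sketch below does this for the numbers quoted above. The class and method names are hypothetical, and the sketch counts only the 60-byte payload, ignoring any per-message framing overhead the queue may add.

```java
/**
 * Back-of-the-envelope arithmetic for the benchmark figures quoted in the text.
 * Only the 60-byte payload is counted; per-message framing is ignored.
 */
public class BurstArithmetic {

    /** Payload bytes moved per second at a given message rate. */
    public static double bytesPerSecond(double messagesPerSecond, int messageBytes) {
        return messagesPerSecond * messageBytes;
    }

    /** Average time budget per message, in nanoseconds, at a given rate. */
    public static double nanosPerMessage(double messagesPerSecond) {
        return 1e9 / messagesPerSecond;
    }

    public static void main(String[] args) {
        final int payloadBytes = 60;             // message size used in the benchmark
        final double singleThreadWrites = 18e6;  // ~18 million writes/s, one thread
        final double reads36 = 120e6;            // 120 million reads/s, 36 threads
        final double writes36 = 100e6;           // "over 100 million" writes/s, so a lower bound

        System.out.printf("1 thread:   %.1f ns per write, %.2f GB/s%n",
                nanosPerMessage(singleThreadWrites),
                bytesPerSecond(singleThreadWrites, payloadBytes) / 1e9);

        double total = bytesPerSecond(reads36, payloadBytes) + bytesPerSecond(writes36, payloadBytes);
        System.out.printf("36 threads: at least %.1f GB/s of payload%n", total / 1e9);
    }
}
```

With these inputs, a single thread spends about 55.6 ns per write and moves about 1.08 GB/s. At 36 threads the total is at least 13.2 GB/s of payload. This is a lower bound because the write figure is "over" 100 million.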

Whilst current levels of volatility in any market would not require this kind of performance from a single server, this headroom should reassure our users that Chronicle software can handle today's volumes and beyond.